Horizon Control

How I bridged the gap between digital design and real-world surgery by creating the first-ever interactive prototype that surgeons could actually use in operating rooms

At a Glance

The Challenge

When surgeons told me "your designs look great, but I need to feel how this actually works," I knew I had to think differently

Picture this: I'm sitting in an operating room, watching a surgeon struggle with clunky arthroscopy software. The interface looks outdated, the controls are confusing, and worst of all – I can't test my designs in the real environment where they'll be used. That's when I had my "aha" moment: what if I could make my prototypes actually work with real medical equipment?

So I did something nobody had done before. I took Arduino boards, connected them to actual medical cameras, and built interactive prototypes that surgeons could use in real operating rooms. No more "this looks nice but how does it feel?" – now they could actually touch, interact, and experience the interface exactly as it would work in practice.

From Sketch to Surgery

Designed interfaces in Figma, then brought them to life with real hardware

Hardware Meets Software

ProtoPie connected to Arduino boards for authentic interactions

Real-World Validation

Surgeons tested prototypes in actual operating rooms

Our Objectives

Defining clear goals and success criteria for transforming medical software design

Success Criteria

Key Challenges to Overcome

My Process: From Frustration to Innovation

How I turned a design challenge into a breakthrough that changed how medical software gets tested

Step 1: Getting My Hands Dirty

I spent weeks shadowing surgeons in operating rooms, watching them struggle with outdated interfaces. They'd tell me things like "I know what I want to do, but the software fights me every step of the way." That's when I realized the real problem wasn't just the design – it was that I couldn't test my solutions where they'd actually be used.

- Observed real surgical procedures and workflows

- Interviewed surgeons about their daily frustrations

- Mapped out the entire user journey from prep to procedure

- Identified the gap between design and real-world usage

Step 2: The Breakthrough Moment

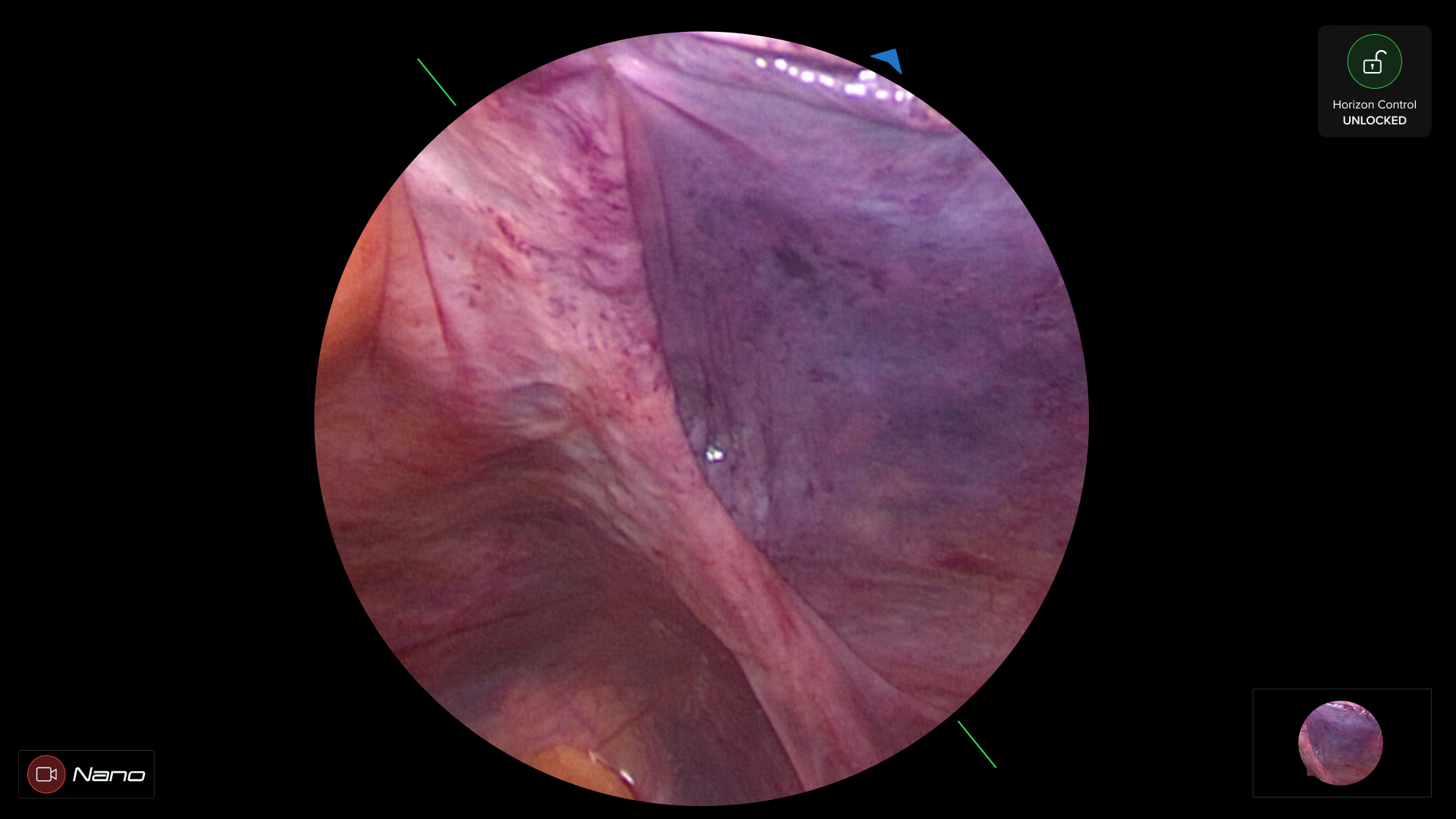

One day, I was tinkering with an Arduino board and had this crazy idea: what if I could connect real medical equipment to my prototypes? I started experimenting, and suddenly my Figma designs weren't just pretty pictures anymore – they were living, breathing interfaces that responded to actual camera movements and surgical instruments.

- Designed interfaces in Figma with surgical workflows in mind

- Built interactive prototypes in ProtoPie Studio

- Connected Arduino boards to real medical cameras

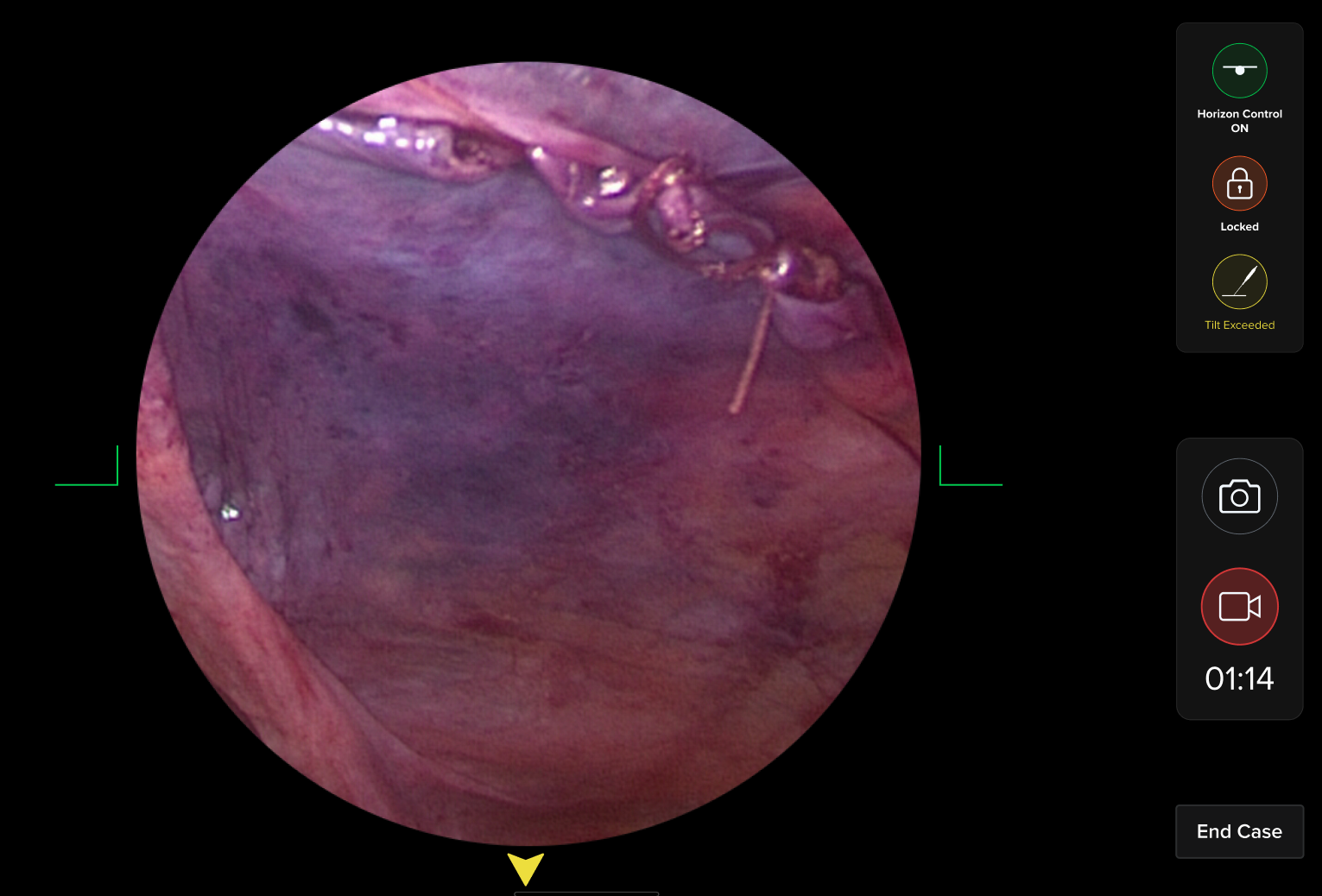

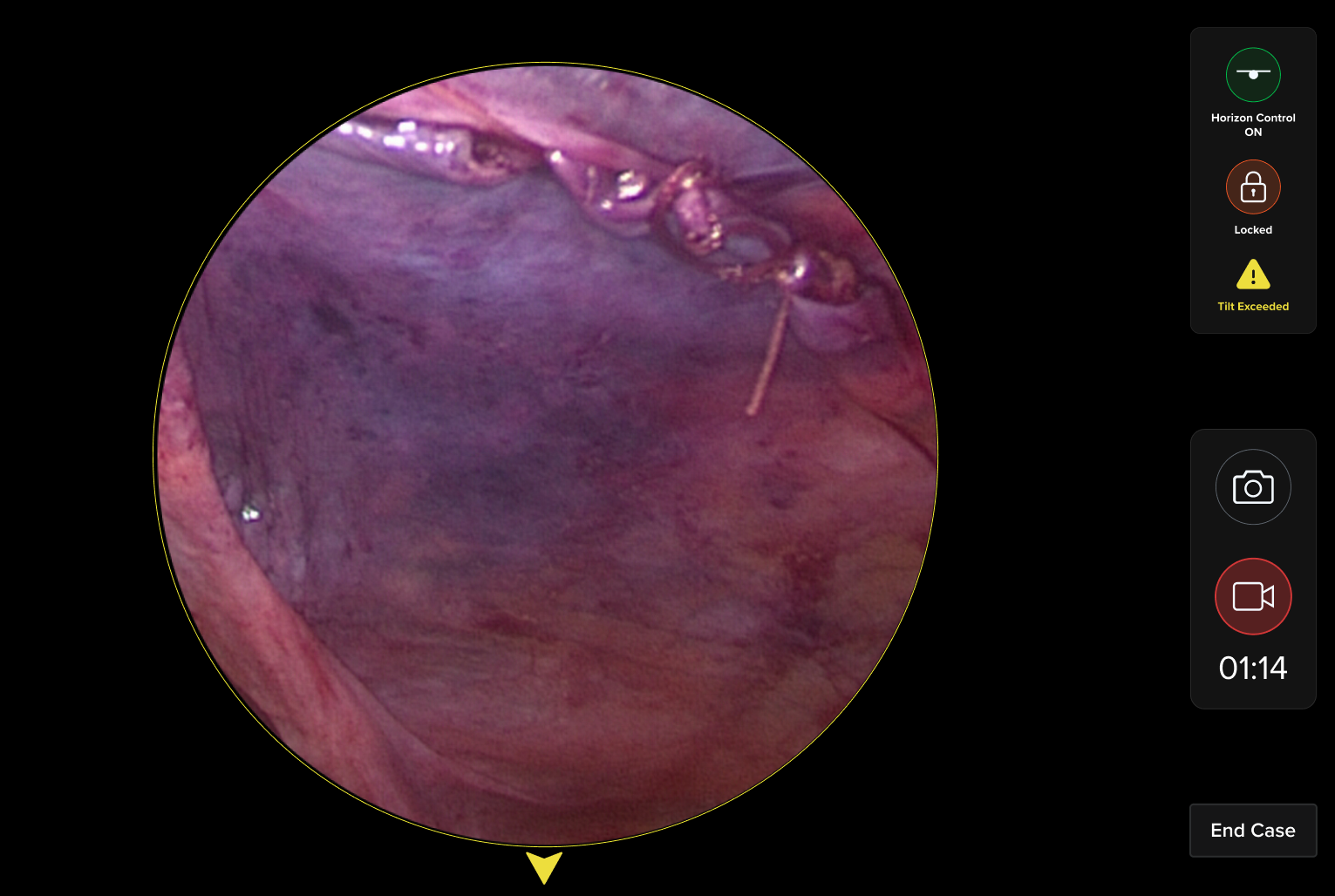

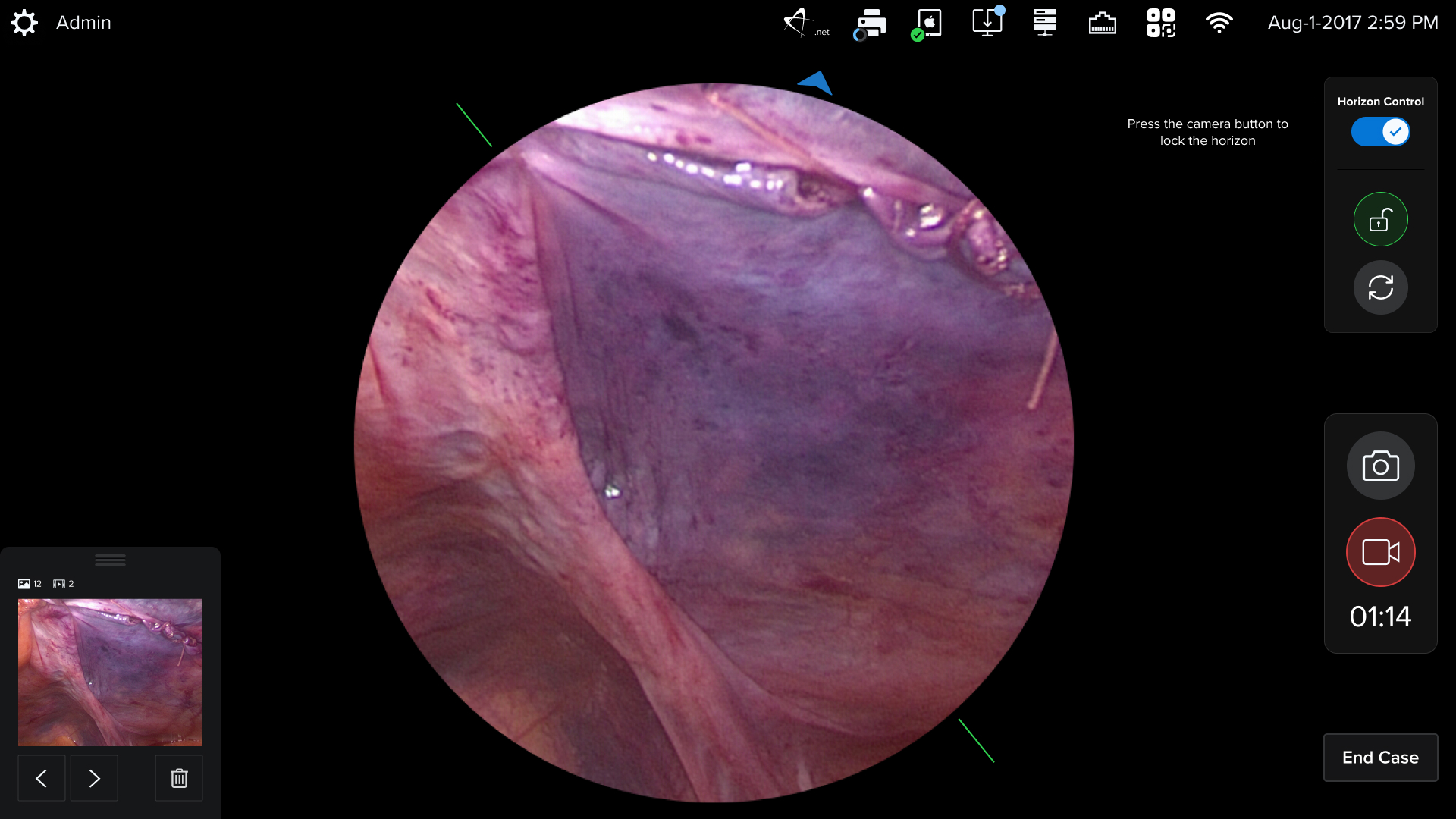

- Created HUD displays that surgeons could actually use

Step 3: The Moment of Truth

The first time I brought my prototype into an operating room, I was nervous. Would it actually work? Would the surgeons see the value? But when Dr. Martinez picked up the camera and the interface responded exactly as I'd designed it, his face lit up. "This is what I've been waiting for," he said. "Now I can actually feel how this will work."

- Tested prototypes in real operating room environments

- Collected feedback from surgeons during actual procedures

- Observed how ergonomics affected real-world usage

- Analyzed motion patterns and interaction flows

Step 4: From Prototype to Production

The feedback was incredible. Surgeons were asking when they could get the real thing. I worked closely with the engineering team to translate my prototypes into production code, and the results spoke for themselves: a 100% adoption rate and 40% faster setup times. But the real win? I'd proven that UX design could bridge the gap between the digital and physical worlds.

- Refined designs based on real-world surgeon feedback

- Iterated prototypes with actual usage data

- Collaborated with engineers to build production-ready software

- Monitored adoption and measured real impact

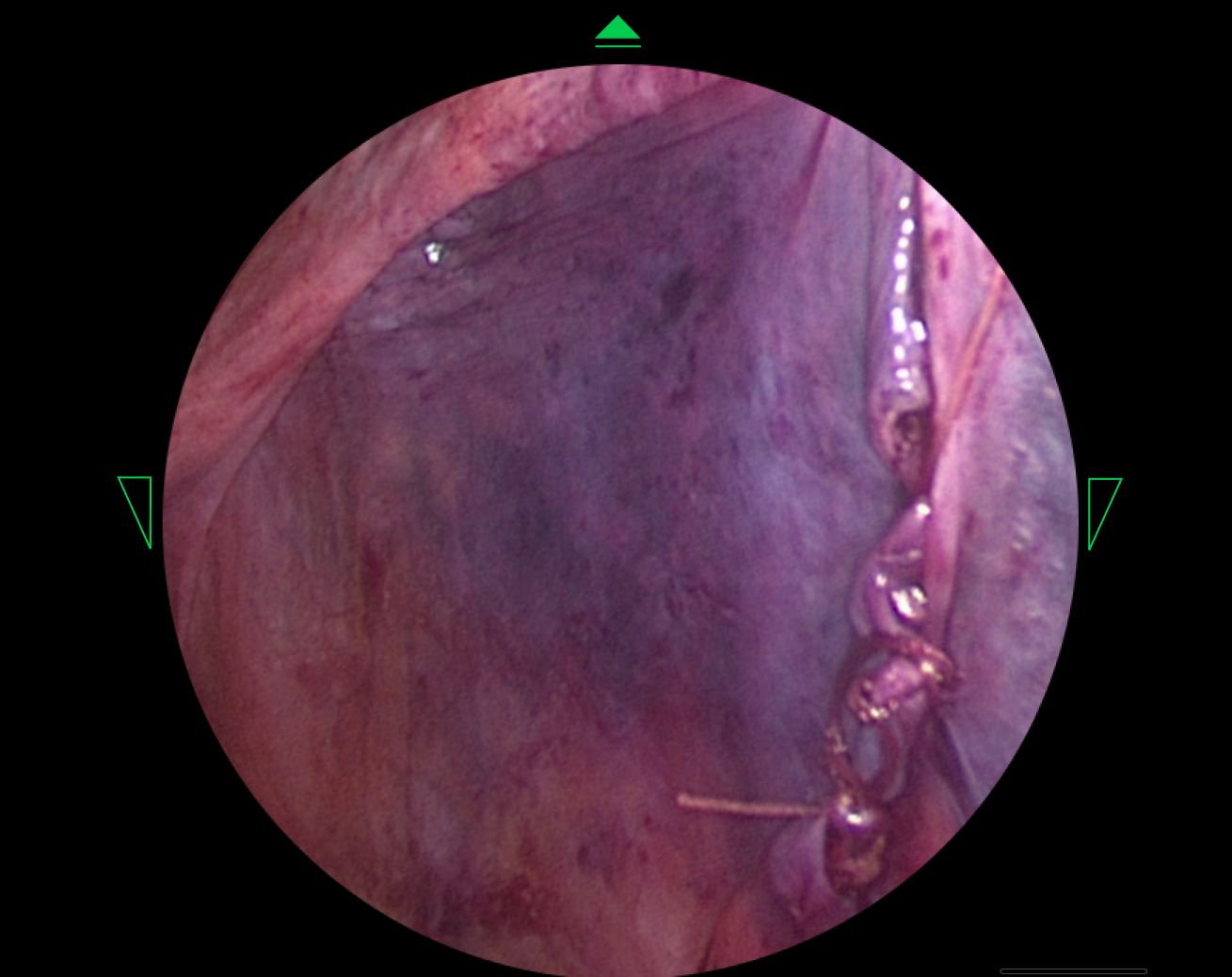

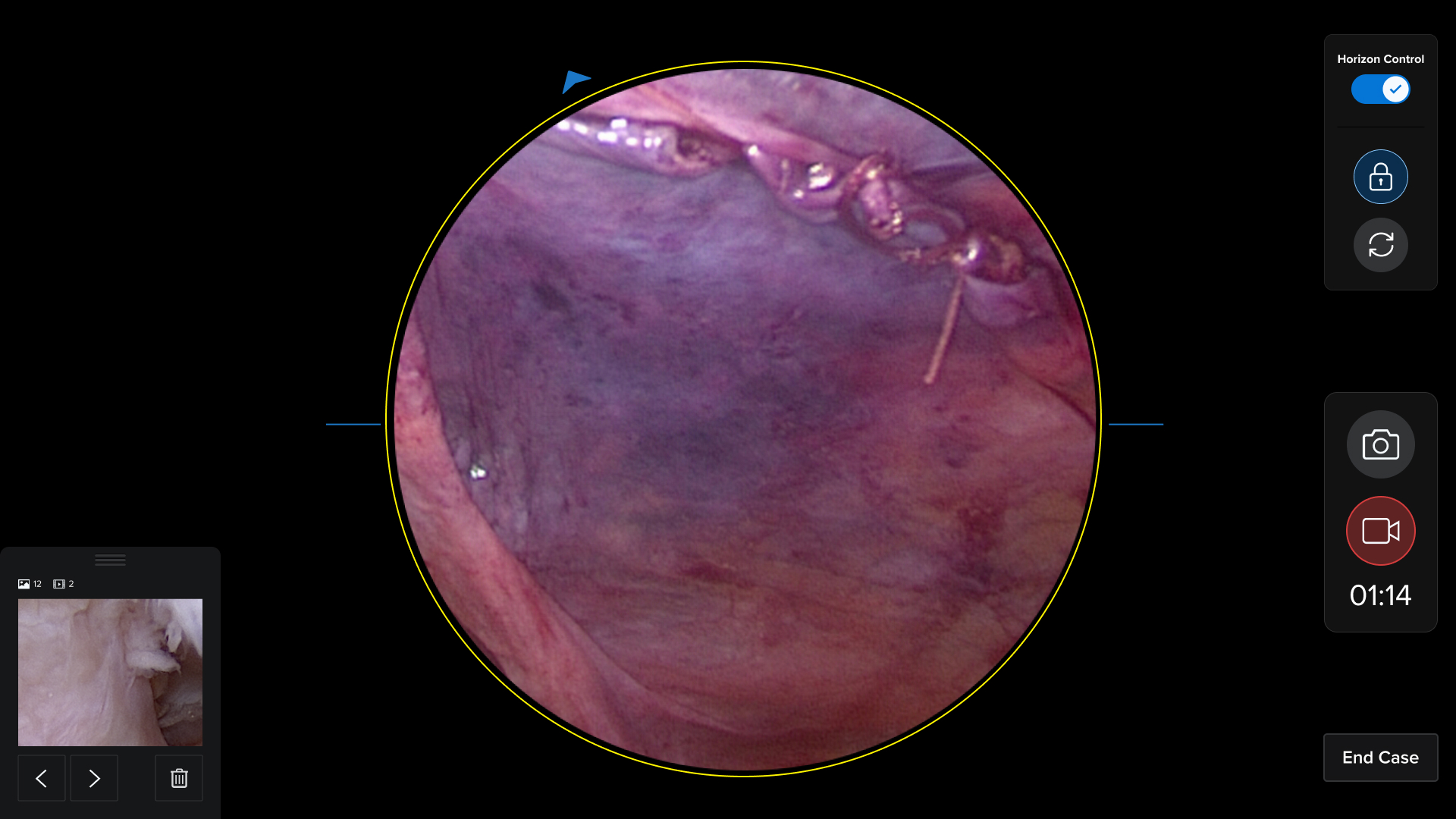

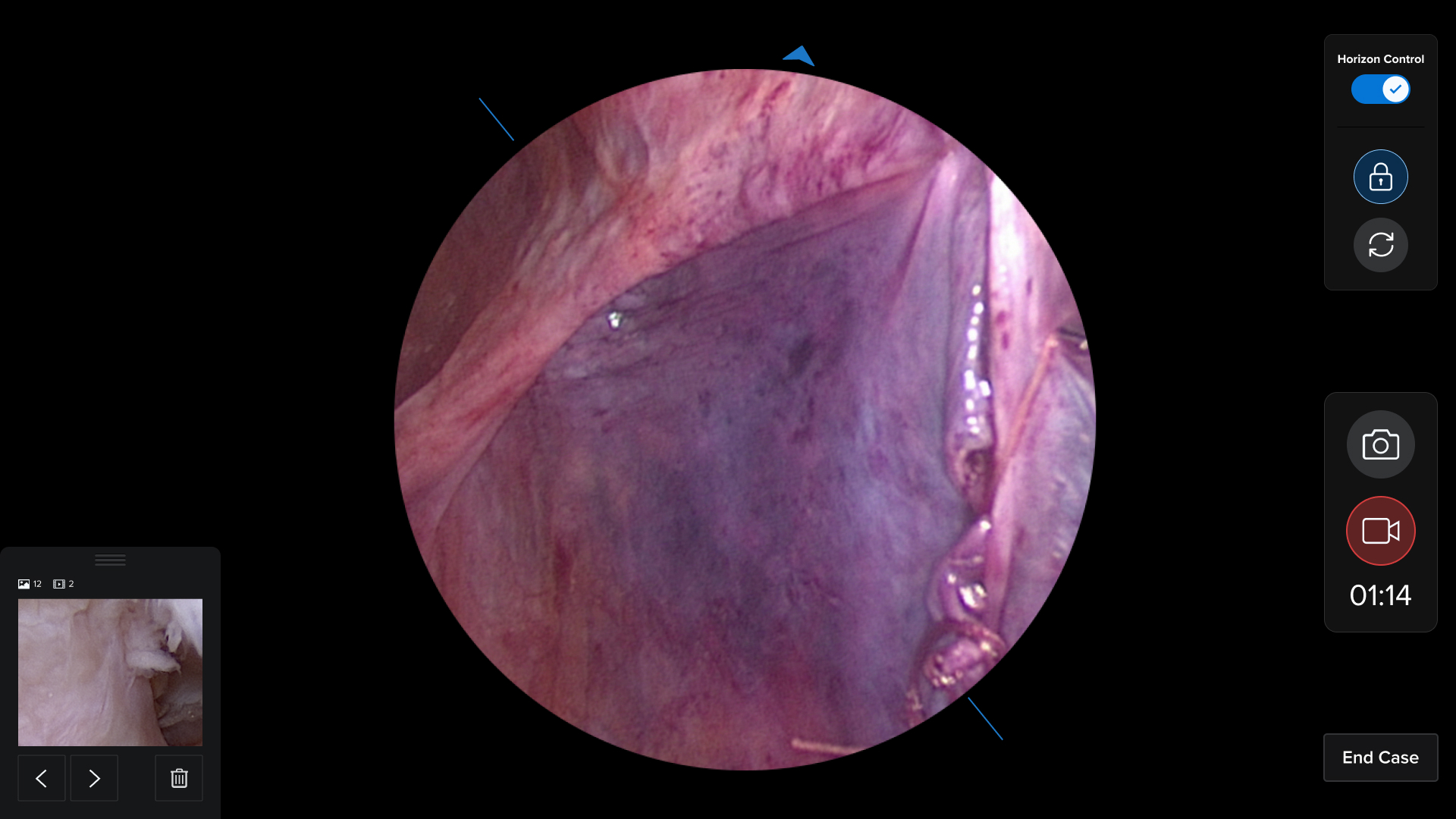

See It In Action

Watch how I brought digital designs to life with real medical hardware

The Results: When Innovation Meets Impact

Numbers don't lie – here's what happened when surgeons could actually experience my designs

100% Surgeon Adoption

Every single surgeon who tested my prototype asked when they could get the real thing. No training sessions, no resistance – just immediate recognition that this was how medical software should work.

40% Faster Setup

Surgeons could get from "power on" to "ready for surgery" in 40% less time. That's not just efficiency. It means less waiting before each procedure, more procedures completed, and less stress for everyone involved.

Zero Safety Incidents

When you're dealing with human lives, "good enough" isn't good enough. My thorough testing and real-world validation meant zero safety incidents – because when surgeons trust your design, patients are safer.

Industry Recognition

My approach didn't just solve a problem – it created a new way of thinking about medical software design. Industry recognition followed, but the real reward was seeing other designers start to think about hardware-software integration.

What I Learned from Pioneering Hardware-Integrated UX Design

Key insights and reflections from breaking barriers between digital and physical design

What Worked Well

Breaking conventional barriers between digital and physical design opened entirely new possibilities. Surgeons responded immediately when they could feel and experience the design rather than just view it on a screen.

What I'd Do Differently

I would involve surgeons earlier in the hardware selection process. While Arduino worked brilliantly, getting surgeon input on the physical setup from day one could have streamlined our first iterations.

Key Takeaway

The best UX solutions come from challenging assumptions about what's possible. By refusing to accept the limitation of static prototypes, I created a new standard for medical software design.

The Tech Behind the Magic

How I made the impossible possible with some creative engineering and a lot of determination

My Toolkit: Where Design Meets Hardware

Figma: Where It All Started

I designed every interface element in Figma, but with a twist – I was thinking about how each component would feel when connected to real hardware, not just how it would look on a screen.

ProtoPie: The Bridge to Reality

ProtoPie became my secret weapon. I could create prototypes that didn't just look interactive – they actually responded to real camera movements and surgical instrument inputs.

Arduino: Making the Impossible Possible

This is where the magic happened. I connected Arduino boards to medical cameras, creating a feedback loop between physical movement and the digital interface, something that had never been done before.
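To make that feedback loop concrete, here is a minimal sketch in Python of the logic such a bridge could run. It is not the project's actual code. It assumes a 10-bit analog reading (0 to 1023, as on an Arduino Uno) and assumes the prototype listens for text lines in the `message||value` style that ProtoPie Connect's Arduino integration is generally described as using. The message name `camera_zoom`, the helper names, and the sample readings are all hypothetical.

```python
from collections import deque

ADC_MAX = 1023  # assumption: 10-bit analog reading, as on an Arduino Uno


def scale_reading(raw: int, out_min: int = 0, out_max: int = 100) -> int:
    """Clamp a raw ADC reading and map it linearly onto a UI range."""
    clamped = max(0, min(ADC_MAX, raw))
    return out_min + round(clamped * (out_max - out_min) / ADC_MAX)


class Smoother:
    """Moving average over the last `size` readings to damp sensor jitter."""

    def __init__(self, size: int = 4) -> None:
        self.window = deque(maxlen=size)

    def add(self, value: int) -> float:
        self.window.append(value)
        return sum(self.window) / len(self.window)


def to_message(name: str, value: int) -> str:
    """Format one line in the assumed `message||value` wire format."""
    return f"{name}||{value}"


if __name__ == "__main__":
    smoother = Smoother(size=4)
    for raw in (500, 520, 510, 530):  # hypothetical sensor readings
        level = scale_reading(round(smoother.add(raw)))
        print(to_message("camera_zoom", level))
```

On real hardware the same three steps (smooth, scale, format) would typically live in the Arduino sketch itself and be written to the serial port. Smoothing matters here because a jittery sensor would otherwise make the interface flicker.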

HUD Design: Surgery-Ready Interfaces

Operating rooms aren't like offices. I designed interfaces specifically for heads-up displays, considering lighting, distance, and the critical nature of surgical procedures.
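One way to reason about the distance part is the standard visual-angle relation, h = 2 · d · tan(θ / 2), which gives the physical height a character needs in order to subtend an angle θ at viewing distance d. The sketch below only illustrates that geometry. The 2 m distance and 20 arcminute target are placeholder values, not figures from this project and not a clinical recommendation.

```python
import math


def min_text_height_mm(distance_mm: float, visual_angle_arcmin: float) -> float:
    """Height (mm) a glyph needs to subtend the given visual angle at a distance."""
    theta = math.radians(visual_angle_arcmin / 60)  # arcminutes -> radians
    return 2 * distance_mm * math.tan(theta / 2)


if __name__ == "__main__":
    # Hypothetical inputs: display viewed from 2 m, 20 arcmin target size.
    height = min_text_height_mm(distance_mm=2000, visual_angle_arcmin=20)
    print(f"Minimum glyph height: {height:.1f} mm")
```

Because the angles involved are small, the required height grows almost linearly with distance, so a label sized for a desk monitor would need to be several times larger on a display mounted across the room.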

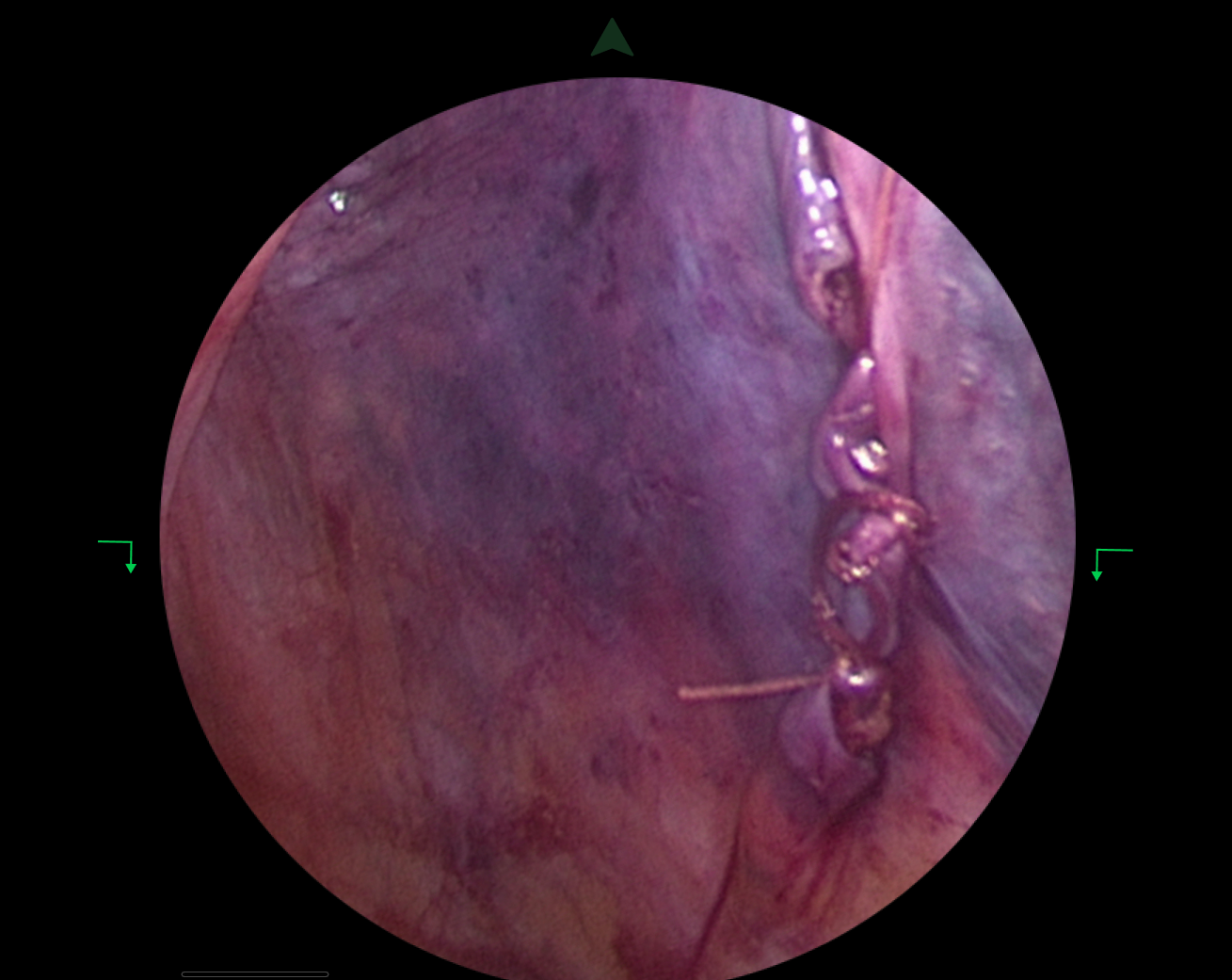

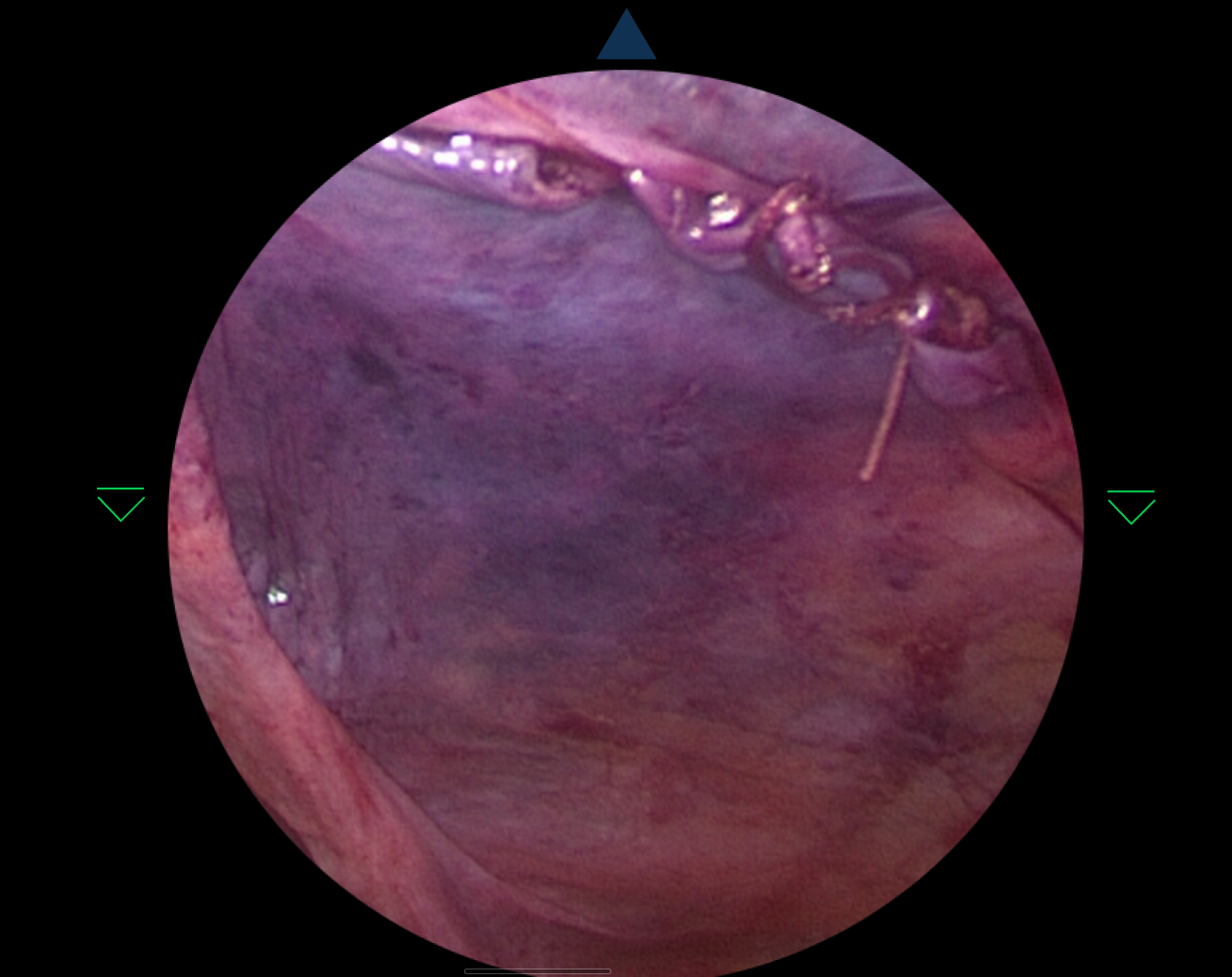

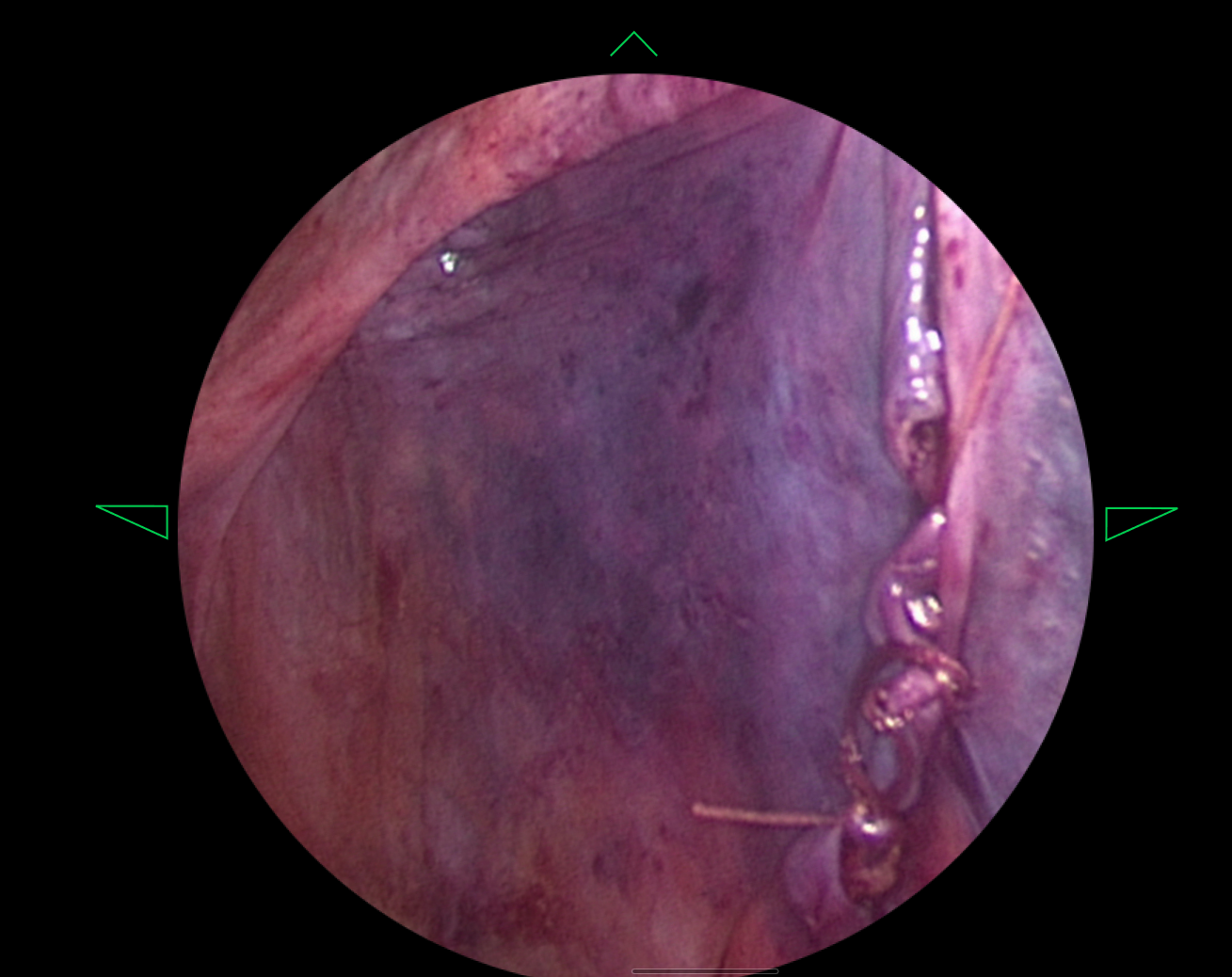

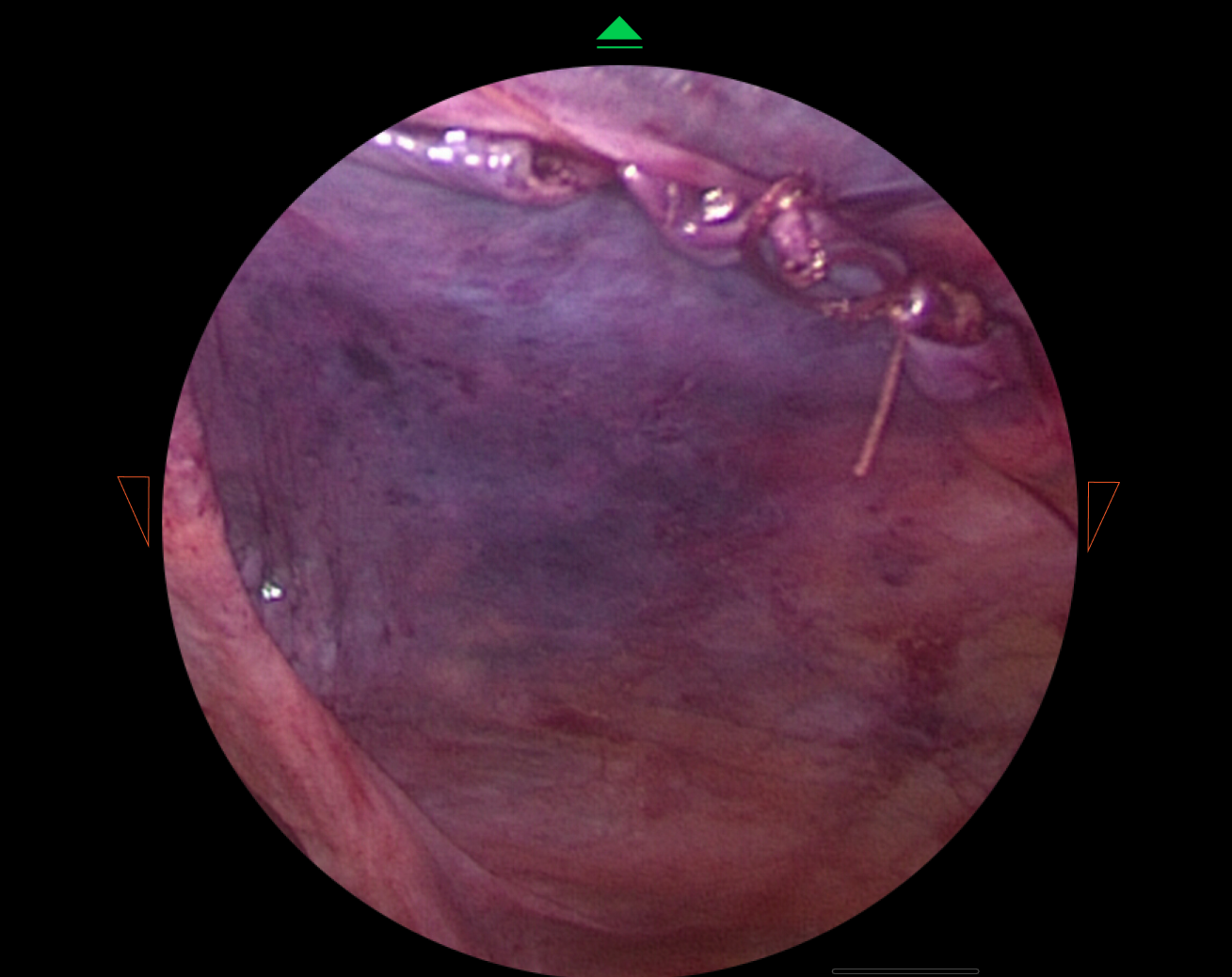

The Journey in Pictures

From initial sketches to final prototypes – see how I brought this vision to life